Deep learning—a first meta-survey of selected reviews across scientific disciplines, their commonalities, challenges and research impact

- Published

- Accepted

- Received

- Academic Editor

- Catherine Myers

- Subject Areas

- Bioinformatics, Artificial Intelligence, Computer Vision, Emerging Technologies, Natural Language and Speech

- Keywords

- Deep learning, Artificial neural networks, Machine learning, Data analysis, Image analysis, Language processing, Speech recognition, Big data, Medical image analysis, Meta-review

- Copyright

- © 2021 Egger et al.

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2021. Deep learning—a first meta-survey of selected reviews across scientific disciplines, their commonalities, challenges and research impact. PeerJ Computer Science 7:e773 https://doi.org/10.7717/peerj-cs.773

Abstract

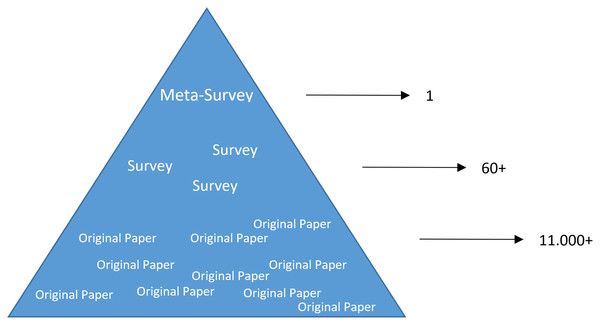

Deep learning belongs to the field of artificial intelligence, where machines perform tasks that typically require some kind of human intelligence. Deep learning tries to achieve this by drawing inspiration from the learning of a human brain. Similar to the basic structure of a brain, which consists of (billions of) neurons and connections between them, a deep learning algorithm consists of an artificial neural network, which resembles the biological brain structure. Mimicking the learning process of humans with their senses, deep learning networks are fed with (sensory) data, like texts, images, videos or sounds. These networks outperform the state-of-the-art methods in different tasks and, because of this, the whole field saw an exponential growth during the last years. This growth resulted in way over 10,000 publications per year in the last years. For example, the search engine PubMed alone, which covers only a sub-set of all publications in the medical field, provides already over 11,000 results in Q3 2020 for the search term ‘deep learning’, and around 90% of these results are from the last three years. Consequently, a complete overview over the field of deep learning is already impossible to obtain and, in the near future, it will potentially become difficult to obtain an overview over a subfield. However, there are several review articles about deep learning, which are focused on specific scientific fields or applications, for example deep learning advances in computer vision or in specific tasks like object detection. With these surveys as a foundation, the aim of this contribution is to provide a first high-level, categorized meta-survey of selected reviews on deep learning across different scientific disciplines and outline the research impact that they already have during a short period of time. The categories (computer vision, language processing, medical informatics and additional works) have been chosen according to the underlying data sources (image, language, medical, mixed). In addition, we review the common architectures, methods, pros, cons, evaluations, challenges and future directions for every sub-category.

Introduction

Deep learning belongs to the field of artificial intelligence, where machines execute tasks that usually require human intelligence. Deep learning is trying to achieve this by mimicking the learning of a human brain. Imitating the physiological structure of a brain, which consists of billions of neurons and connections between them, a deep learning algorithm consists of an artificial neural network of interconnected neurons (McCulloch & Pitts, 1990; LeCun, Bengio & Hinton, 2015). Also, similarly to the learning process of humans with their senses, deep neural networks are fed with sensory or sensor data like texts, images, videos or sounds (Ravì et al., 2016). These networks outperform the state-of-the-art methods in different tasks and, thanks to this, the whole field saw an exponential growth (Wang et al., 2018a; Gibson et al., 2018; Pepe et al., 2020). This resulted in way over 10,000 publications per year, in the last years. For example, alone the search engine PubMed (https://pubmed.ncbi.nlm.nih.gov/), which covers only a sub-set of all publications in the medical field, returns over 11,000 results for the search term deep learning in Q3 2020, and around 90% of these publications are from the last 3 years only. Consequently, a complete overview over the field of deep learning is already impossible to obtain and, in the near future, it will probably become difficult even for single sub-fields. However, there are several review or survey articles about deep learning, which focus on specific scientific fields or applications, for example, covering only deep learning approaches from computer vision (Voulodimos et al., 2018; Guo et al., 2016), or specific tasks like object detection (Liu et al., 2020; Zhao et al., 2019; Jiao et al., 2019) or object segmentation (Garcia-Garcia et al., 2018; Minaee et al., 2020). With these surveys as foundation, the aim of this contribution is to provide a first categorized and high-level meta-survey of selected works of deep learning reviews or surveys. On the top level, four main categories have been chosen for this contribution, namely: computer vision, (natural) language processing, medical informatics and additional works. The reasons behind this course of action was the underlying characteristics of data sources and to have about the same number of reviews for every main category with a well-balanced distribution. Although the last category could be further divided, this would lead to main categories with a small number of reviews; even only one review for some niche fields. Table 1 gives an overview of the four main categories and the number of screened reviews for each of them. Further, it presents the sum of the overall references and citations per category, to provide an impression of how comprehensive and influential the fields are. The subsequent tables from the single categories present more details for each of the main categories. The tables present the sub-categories and the corresponding publications, and again, also the number of references and citations for each of these sub-categories. Hence, these selected works of deep learning reviews or surveys across scientific disciplines depict the research impact they already had within a relatively short time. Note that the deep learning reviews selected for this contribution present themselves mostly an overview of (selected) deep learning works in a specific field and categorize them in sub-sections or areas. Therefore, this course of action is also applied to this meta-survey. The reason for this is that deep learning algorithms have often been applied to completely different datasets and modalities, which makes it difficult to combine them in a systematic survey as it can be seen in the referenced reviews.

| Categories | Number of publications | Years | Number of references | Citations (until August 2020) | Preprints |

|---|---|---|---|---|---|

| Computer vision | 18 | 2016–2020* | 3,624 | 3,923 | Yes |

| Language processing | 14 | 2016–2020 | 2,109 | 2,490 | Yes |

| Medical informatics | 12 | 2016–2020 | 2,210 | 6,722 | No |

| Additional works | 17 | 2016–2020 | 3,481 | 4,171 | Yes |

| Sum | 61 | – | 11,424 | 17,306 | – |

Note:

Search strategy

For this meta-survey a search in IEEE Xplore Digital Library, Scopus, DBLP, PubMed, Web of Science and Google Scholar for the keyword ‘Deep Learning’ together with any keyword between ‘Review’, ‘Survey’ was performed. Based on titles and abstracts, all records, which were not actual review or survey contributions, were excluded. This ultimately resulted in a total number of 61 review or survey publications about deep learning, which will be covered within this meta-survey. Summarized, this high-level meta-survey gives a snapshot overview of published deep learning reviews (status as of August 2020) and a compact overview of the search results can be found in the subsequent Tables of the corresponding sections of this contribution. Note that this meta-survey includes a few selected preprints. However, some of these have already up to one hundred or even several hundreds of citations, and hence have proven to be of high interest for the community and it can be expected that they will be published in a peer-reviewed venue sooner or later. These reviews were included as they cover specific and interesting research areas that have not been covered elsewhere yet.

Manuscript outline

The core of this meta-survey explores exclusively reviews and surveys on deep learning. Because some of the included reviews cover up to several hundred publications themselves, only high-level summaries and excerpts are given to keep the manuscript concise for the reader. Hence, every review publication is summarized in around 100 to 200 words and, thus, every sub-category has around 100 to up to a few hundred words, depending on the amount of review contributions in this area. The classification and arrangement of the presented deep learning reviews should enable the interested reader to dive deeper into specific categories and sub-categories by pointing to the associated publications. We also mention already reviewed deep learning architectures, like CNNs. However, all architectures, methods, etc., will be outlined in detail within the next sections, called: Going deeper: common architectures, methods, evaluations, pros, cons, challenges and future directions of the categories. Hence, the second parts of this first section also outline the fundamental deep learning concepts. Summarized, the following main sections of this meta-survey are organized as follows: Section two introduces the deep learning reviews or surveys on a high (meta) level divided into four main categories: computer vision, language processing, medical informatics and additional works, and presents the common architectures, methods, pros, cons, evaluations, challenges and future directions for every sub-category. Section “Conclusion and Discussion” concludes and discusses the contributions and outlines areas of future directions, also on a high (meta) level.

Furthermore, for readers with a particular interest towards the medical field, a systematic meta-survey about medical deep learning surveys, which are only partially covered within this contribution, is also available (Egger et al., 2020).

Fundamental deep learning concepts

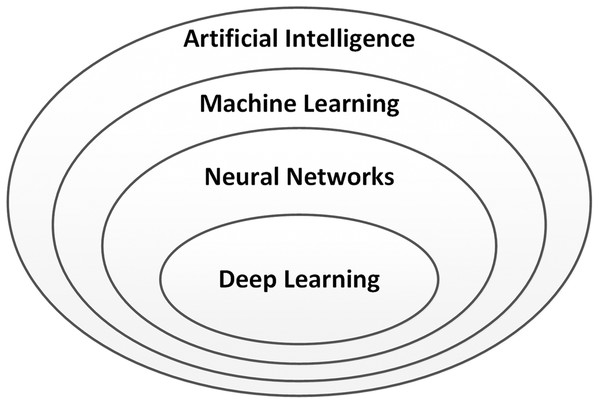

Deep learning (Sze et al., 2017; Goodfellow et al., 2016) is an important part of the discipline of artificial intelligence (AI), which was coined by John McCarthy. Figure 1 shows the relationship of deep learning to the whole field of artificial intelligence.

Figure 1: Relationship between deep learning and artificial intelligence.

As one of the main subfields of AI, the study of machine learning, which was defined by Arthur Samuel in 1959, makes it possible for machines to learn certain patterns in data, without being further taught or programmed by humans. This process is also referred to as training. As a result, the machines can complete many tasks without hand-crafted approaches created by humans. Within the field of machine learning, neural networks, as the name suggests, aim to emulate how a human brain works, even though only in a highly abstracted way. Like the real brain, the neural networks comprise mainly neurons and synapses, which are usually called artificial neurons and connections.

Neuron and neural network

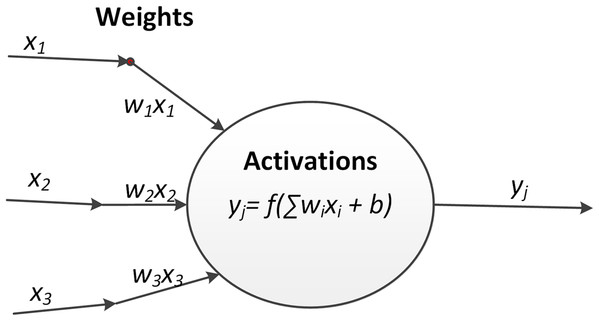

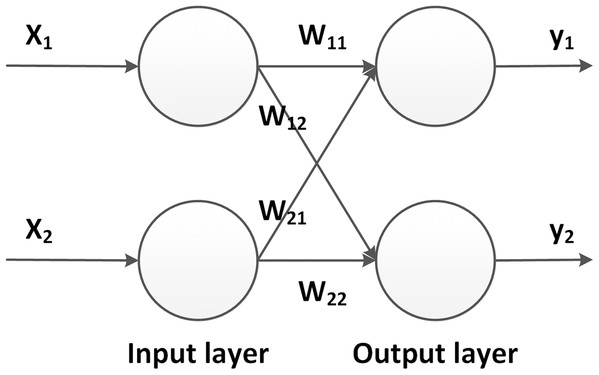

As shown in Fig. 2, a neuron in machine learning is simply a mathematical function. The inputs are multiplied by weights w and summed together. Additionally, a bias b may be added. This weighted sum is then passed to a function f, which is usually non linear and the output of the neuron. During the forward propagation of data through a neural network, this procedure is applied to each neuron:

Figure 2: The outputs of neuron related to the inputs.

(1) where xi and yj are the inputs and output of the neuron and wij and bj are the weights and bias terms. At the beginning of the training process these terms are typically randomly initialized. The input netj represents the weighted sum of outputs from previous neurons. A common activation functions is the logistic Sigmoid function:

(2)

It can also be proven that the derivative of Eq. (2) function can be simply computed as:

(3)

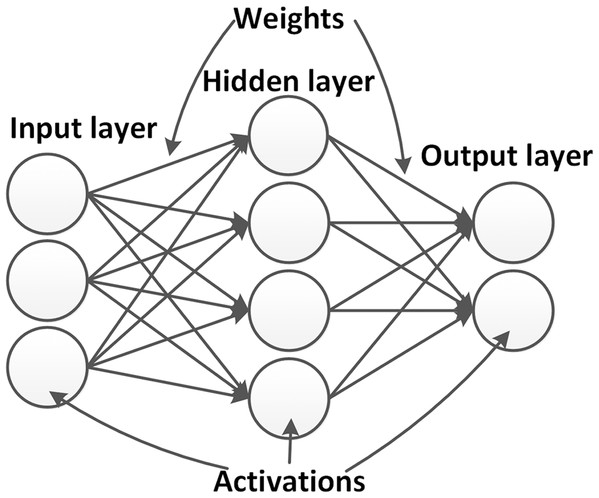

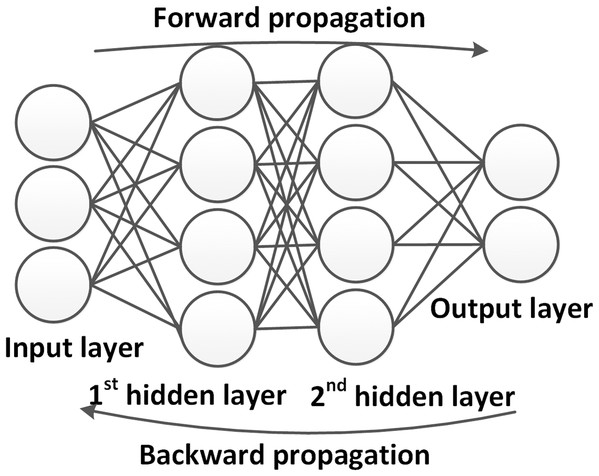

Figure 3 shows an example of a neural network architecture in its simplest form. Within the neural network, neurons are arranged in layers. A network always has an input and output layer, whose configurations are determined by the dimensions of input and output data. Between them, a number of hidden layers is introduced, which, during the training process, are used to model the relationship between the input and output. In so-called deep neural networks (DNNs), a high number of hidden layers are introduced, which is the reason why DNNs are usually capable of learning features of high complexity. The propagation of the inputs to the output layer is called the forward propagation.

Figure 3: Example of a simple neural networks.

The network contains one input layer, one hidden layer and one output layer. Activations (neurons) in different layers are connected by weights.During the training process, the architecture of neural networks remains unchanged, while the weights of connections and the biases of neurons are adapted depending on the difference between network outputs and real data. In the field of computer vision, for example, the inputs can be pixels of images, while the outputs can be labels for the entire images (image classification) or labels for the individual pixels (image segmentation).

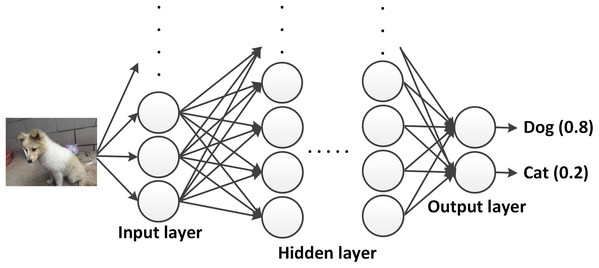

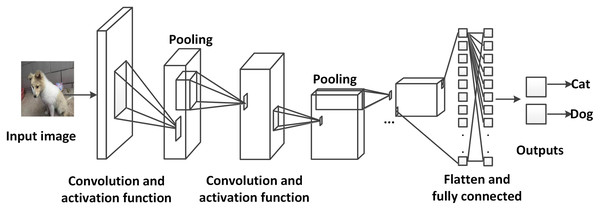

An example for the forward propagation using DNNs is shown in Fig. 4. An image is used as an input to the network, where each pixel is weighted and propagated through the hidden layers. Each output activation gives a value, which is the probability of the shown animal to belong to a specific class or label. The highest value determines the most likely answer. The difference between the network output and the desired output is called the loss. The goal of the training process is to minimize the loss by means of changing the weights and biases, for which two main schemes are introduced in the next subsection.

Figure 4: Example of a DNN.

The image data is provided to the input layer, weighted and propagated to further hidden layers. The output layer provides the prediction about what kind of animal (dog or cat) is shown in the input image (photographer source credit: Yuan Jin).Backpropagation and gradient descent

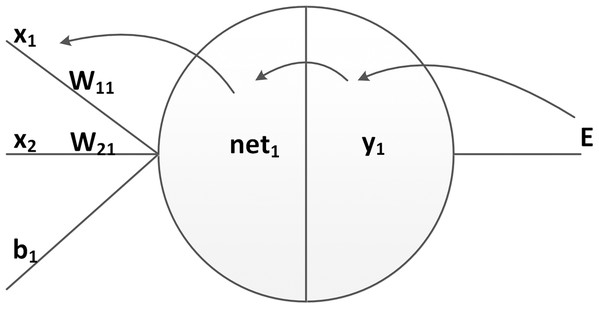

In this section, two basic concepts for the training of deep learning models are introduced, namely backpropagation and gradient descent. For the reason of simplification, a simple network, as shown in Fig. 5, is introduced. It consists of only one input and one output layer, without any hidden layer. After the forward propagation, the outputs are calculated and compared with the correct values. The motivation during the training process is to update the model parameters such that the output moves closer to the truth. To this end, the current error or loss of the network is passed reversely through the model, which is why this step is called backward propagation or backpropagation.

Figure 5: Simplified neural network.

The first step in the backpropagation is to calculate the output loss. There is a large range of loss functions that are commonly used in deep networks, depending on the application. As an example, we show the usage of a squared error loss function:

(4)

Here, ti are the expected outputs, yi the actual outputs of output neurons, and E is the resulting overall error or loss. The coefficient of 1/2 is added to offset the derivative exponent.

During error backpropagation, the gradient of the loss function with respect to the network parameters is calculated. As shown in Fig. 6, the partial derivative of the loss relative to each weight can be computed by using the chain rule twice:

Figure 6: The decomposition of a partial derivation.

(5)

It is assumed that this neuron is the output neuron, which means the output of neuron oj is the same as the output of neural network yj. Under the assumption of a logistic activation function and a squared error loss, the three partial derivatives on the right-hand side in Eq. (5) are computable using:

(6)

(7)

(8)

Note that in Eq. (5), only one term in the sum netj depends on wij, which leads to Eq. (8).

If this neuron is in an inner layer of the network, the calculation of the derivative of E with respect to output y is calculated. Considering E as a function of all neurons L = u,v,…,w receiving their input from neuron j, Eq. (6) can be changed to:

(9)

A recursive expression for the derivative is obtained:

(10)

Then, the derivative with respect to oj can be calculated if all the derivatives with respect to the outputs ol of the next layer in the network are computed. A general solution to the derivative in Eq. (5) can be generated with Eqs. (6)–(8) and (10):

(11) with

(12)

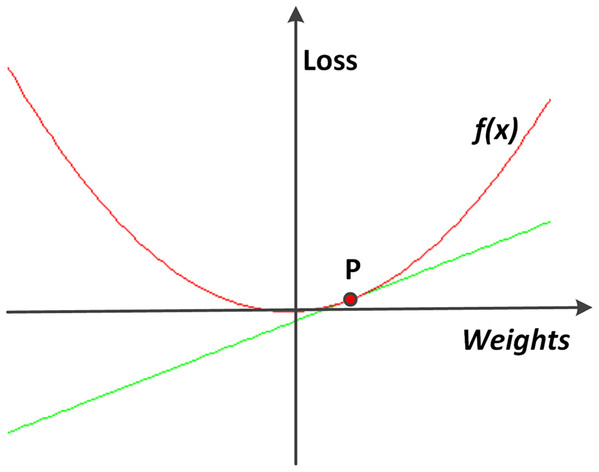

After all derivatives of the loss with respect to the network parameters, and consequently, the gradient of the error function, have been calculated, the network parameters are updated using the gradient descent method. The basic idea of gradient descent is to move to the opposite direction of the gradient, to find its (local) minimum.

Figure 7 shows a simplified example of using a derivative to find the direction of the gradient descent. The weights are changed according to the following equation:

Figure 7: An example of gradient descent.

To find the direction on the point P, along which the loss function f can decline, a derivative line of f along the point P is plotted. In this simple example, it gets obvious that the value of f decreases as the value of x decreases.(13) in which β is called the learning rate.

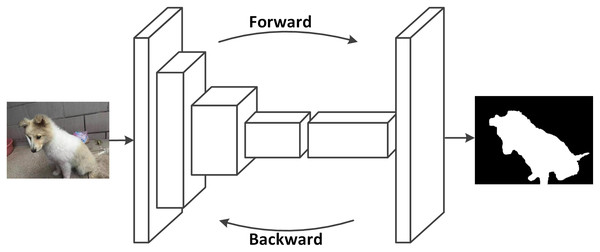

To sum up, in the forward propagation, the input data are propagated from the input layer to the output layer and the network provides the computing results (Fig. 8). In the backward propagation, the loss function is propagated from the output layer to the input layer, while the weights of each neuron is updated. One iteration of the training process ends when all weights of the network are updated. One complete training process usually involves numerous iterations during which the weights are updated systematically to move the network outputs closer to the expected ground truth.

Figure 8: Example of the training process for DNNs.

Convolutional neural networks

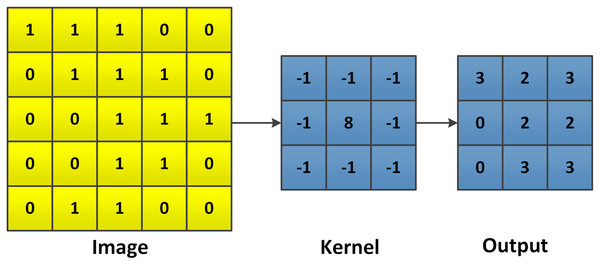

Convolutional neural networks (CNNs) (Krizhevsky, Sutskever & Hinton, 2012) are a special type of artificial neural networks (ANNs), which usually include more than one convolutional layer. CNNs are widely used in image processing (but also for example in language processing, however, they are far less popular there), since they have superior ability of information handling for a large amount of data. As the name describes, CNNs are based on convolution (shown in Figs. 9 and 10). The mathematical expression of a 2D convolution of an image is:

Figure 9: An example of a convolutional neural network applied for image classification.

The images are inputted to the network, propagated through several convolutional layers, pooling layers and one fully connected layer. Finally, the possibility of the input image representing a cat or a dog is outputted (photographer source credit: Yuan Jin).Figure 10: Example of a general 2D convolution.

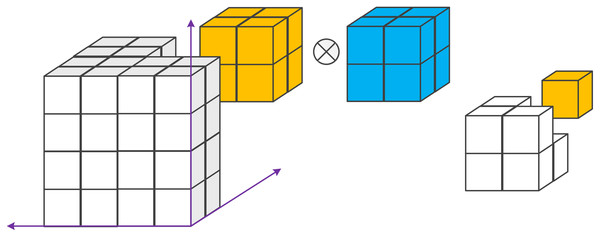

At first, the image is flipped for both, the rows and columns. Then the kernel slides over the flipped image, each element is multiplied by its corresponding pixel in the flipped image and summed up. The size of the output depends on both, the input image and the kernel.(14) in which k(i, j) is the input image, l is the convolution or filter kernel and g(i, j) is the convolved/filtered image. Equation (14) shows the basic form of a 2D convolution, which can also describe the similar calculation of a 3D convolution as shown in Fig. 11.

Figure 11: Example of a 3D convolution.

It applies a 3D filter to the dataset and the filter moves in 3-direction (x, y, z) to calculate the outputs. Both input and output data are a 3D volume, which is represented by cubes.Like traditional neural networks, a convolutional neural network consists of an input and an output layer, as well as several hidden layers which usually consist of convolutional layers in combination with other layer types, such as pooling layers and fully connected layers. The input images of CNNs are usually in the size of image height × image width × image depth. After the convolution, the images are abstracted to so-called feature maps and passed to the next layer. A convolutional layer has the following parameters:

The number of input and output channels;

Convolutional kernels defined by their shape.

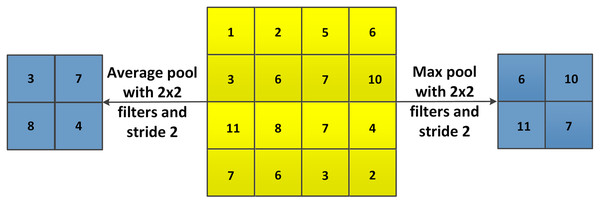

During the training process of CNNs, the features maps can become very large, which results in a big amount of computational resources required. One of the methods to solve this problem is the pooling layer. Pooling layers reduce the dimensions of feature maps from convolutional layers and pass the reduced data to the next layer. Different functions for pooling are available, such as max pooling, average pooling and sum pooling, where max pooling is most commonly used.

As shown in Fig. 12, with max pooling or average pooling operations, the 4 × 4 feature map is transformed to a 2 × 2 feature. The stride controls how the pooling filter is moved over the input volume.

Figure 12: Example of max pooling and average pooling.

At the end of a CNN, there are usually one or several fully connected layers, which connect every neuron in the last layer to every neuron in itself. The fully connected layers are used to concatenate the feature maps into the desired output values (e.g., using the same example as above, the probability of an image showing a cat or a dog).

Currently, CNNs have been widely used in the field of imaging processing. In the following section, a specific CNN model mainly for medical image segmentation will be introduced.

Fully convolutional neural networks

As one of the most common tasks in medical imaging, automatic segmentation is challenging because of the huge difference between different patients (anatomy and pathology). In this field, however, neural networks have shown great advantages to learn image features automatically from the medical images and corresponding ground truths (Hesamian et al., 2019). In addition, the development of fully convolutional neural networks (FCNs) [19] further improved the advantages of deep learning in the area of image segmentation and particularly semantic segmentation.

Semantic segmentation is to understand what is in the image on a pixel level, which can be also defined as to label each pixel of an image with a corresponding class. Figure 13 shows an example for a semantic segmentation. Fully convolutional neural networks were developed by Long, Shelhamer & Darrell, 2015 based on normal convolutional neural networks. In FCNs, the final fully connected layer of CNNs are replaced by convolutional layers, so that the images are not downsized and the output will not be a single label as in CNNs. Instead, a pixel-wise output can be calculated to represent each pixel in the input image. Figure 14 shows a typical setup of an FCN for a semantic segmentation. Based on the improvement of FCNs, there are currently many deep learning methods in computer visions that are applied for segmentation tasks in medical imaging. Most of these methods are based on FCNs that learn the features of spatial dimensions from the original images.

Figure 13: Example of a semantic segmentation.

The left image (A) is the original image and the right image (B) is the pixel-wise segmented image revealing a dog and its contour (white, photographer source credit: Yuan Jin).Figure 14: FCNs can learn to make dense predictions for pixel-wise predictions (photographer source credit: Yuan Jin).

U-Net

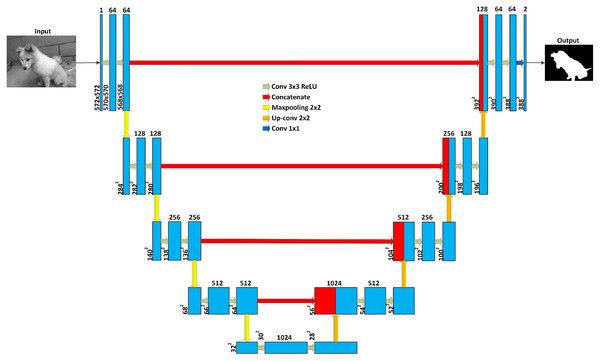

One of the most well known FCN models for medical image segmentation is the U-Net, proposed by Ronneberger, Fischer & Brox (2015), using the concept of deconvolution introduced in Zeiler & Fergus (2014). As shown in Fig. 15, this model has two main parts for downsampling and upsampling. The downsampling part is similar to the structure of a CNN, while the upsampling part, usually known as the expansion phase, is built of an upsampling layer followed by a deconvolution layer. The former enlarge the dimensions of the feature maps that are reduced in the downsampling layer, while the latter corresponds to the convolutional layers. One important idea of U-Net is the connection between layers in the downsampling and upsampling parts at the same ‘‘levels’’ These connections provide high-resolution features of spatial dimensions from the downsampling part to the upsampling part. It means that the model can be trained with multi-scale features from different layers. This structure of deep learning model is suitable for the automatic segmentation of large size images.

Figure 15: U-Net architecture.

Each blue box corresponds to a multi-channel feature map. The number of channels is denoted on top of the box. White boxes represent copied feature maps. The arrows represent different operations (photographer source credit: Yuan Jin).3D U-Net

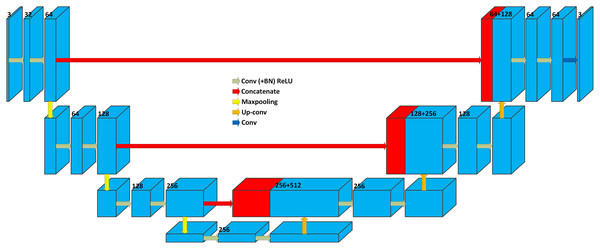

Based on the main idea of the U-Net, Çiçek et al. (2016) developed the 3D version of the U-Net model [23], which is able to learn from sparsely annotated volumetric images. The 3D U-Net is developed by replacing all 2D operations with their 3D counterparts. Figure 16 shows the architecture of the 3D U-Net, which is similar to the architecture of the original 2D U-Net (Fig. 15).

Figure 16: 3D U-Net architecture.

Blue boxes represent feature maps. The number of channels is denoted above each feature map.There are two kind of applications for this model: (1) When semi-automatically applied, a part of the original image is manually segmented. The network learns from these segmented parts and provides a dense 3D segmentation. (2) For a fully automatic application, a trained model is assumed to exist. The model is trained with existing data and segments new (unseen) volumetric images.

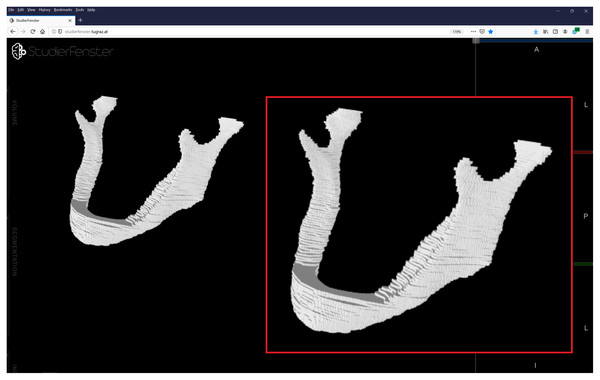

3D U-Nets are widely used in the field of medical imaging, as there are many types of medical imaging applications based on 3D data acquisitions. In general, 2D methods are usually not optimal for producing 3D image labels (segmentations), because of the missing information (and interpolations) between the single 2D slices. Manual expert segmentations (still considered the gold standard), however, are in general done on a slice-by-slice basis in 2D, because a 3D segmentation, handling several 2D slices simultaneously, is mentally often too demanding for the annotator (especially in larger structures, which need some considerable amount of time to be segmented). This often results in a step-like effect of the manual segmentations, because an overall smoothing in 3D is missing and hard to incorporate manually (Fig. 17).

Figure 17: Visualization of a manual segmentation of the lower jawbone.

Because the manual segmentation has been done slice-by-slice in 2D, the overall 3D segmentation shows a step-like effect between the single 2D slices. Data and manual segmentation taken from Wallner, Mischak & Egger (2019), visualization done with Studierfenster (www.studierfenster.at) (Wild, Weber & Egger, 2019).We focused in this section on the main computer vision architectures as an example, because computer vision is currently the most crowded and popular field (and three of the top five computer science conferences are related to computer vision, according to: https://www.guide2research.com/topconf/), especially when taking also the medical imaging field into account. Note that the surveys we present in this meta-survey cover over 11,000 references, which makes it impractical to introduce all deep learning-based concepts and architectures. However, for most surveys, we outline what kind of deep learning schemes or architectures have been reviewed and pretty much all surveys have a background section about these in their contributions. This enables the interested reader to dive deeper into the specific deep learning background by directly accessing the corresponding survey.

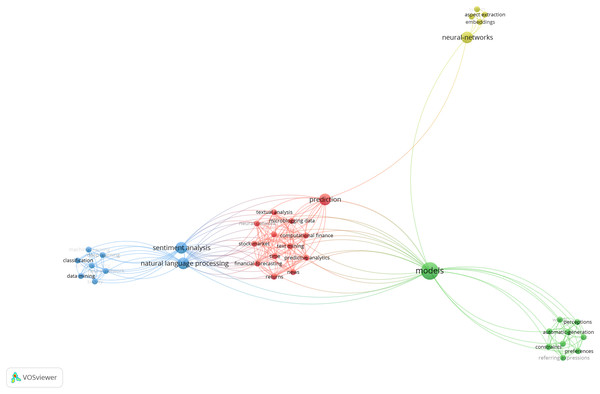

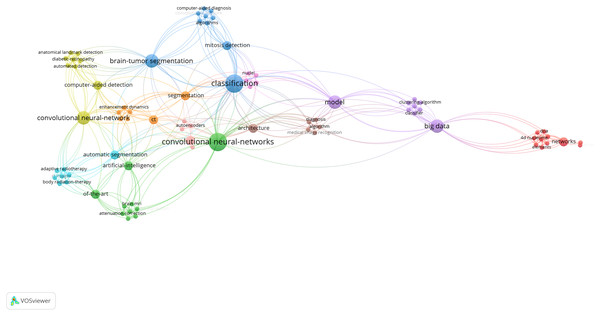

Deep learning: a survey summary of selected reviews across scientific disciplines

This section presents selected review and survey publications in deep learning. For a better overview, the publications are arranged in four categories:

computer vision,

language processing,

medical informatics,

and additional works.

The first category introduces deep learning reviews and surveys in the field of computer vision. Among others, this includes publications about object detection, image segmentation, object recognition and a survey about inpainting with generative adversarial networks. The second category presents deep learning review publications in (natural) language processing. This covers areas like language understanding but also language generation and answer selection. The third category presents deep learning reviews in the medical field, thus covering reviews about different aspects of medical image processing, medical imaging and computer-aided diagnosis. Ultimately, the last category of this section closes with additional deep learning reviews in areas like big data, networking, multimedia, agriculture and reviews that cover multiple scientific areas or applications. According to these sections and sub-sections, the tables of this manuscript are divided into the same categories and sub-categories, and present also the current citations for every publication according to Google Scholar (status as of mid-August 2020). Note that the review about data augmentation is listed in the first category about computer vision topics, because it mainly discusses approaches for images. Some review publications would fit in more than one main category. For example, reviews about medical image analysis, could also fit into the category computer vision. However, the final assignment and arrangement was made also under the consideration of balancing the number of publications per category.

The rationale behind choosing the four main categories are the underlying data sources and their different data generation process. Computer vision deals with image and video data, natural language processing deals with textual data, medical informatics with medical data and the last section covers surveys that deal with image and text (multimedia) data or span across different disciplines. Unfortunately, the boundaries between these categories are not always clear, e.g., medical informatics connects to both computer vision and language processing. Hence, there are various other possibilities to structure such a meta-survey (or surveys in general), for example, by data modalities (e.g. image vs. language), by domains, by used architectures, by time of publication, by impact, just to name a few. But all these arrangements have their advantages and disadvantages, and are not unique, except maybe an arrangement by time of publication or impact (e.g. citations, which also very likely changes over time). However, the underlying medical data is distinct to conventional image data in computer vision with its specific characteristics. In addition, the medical field requires further knowledge on top of the technical expertise (e.g., a medical image might require different pre-processing/deep learning than generic images). Moreover, deep learning had a massive impact in the medical field, as can be seen in the presented works and citations, which justifies for its own category (also, health is a fundamental right and probably the most important aspect in life). For illustration, a similar situation is also found for research on causality, where similar techniques are used across multiple fields, but the medical field established its own terminology (e.g., standardization vs. adjustment formula). Finally, the order of the main categories also arises from the data sources, starting with computer vision and language processing working with fundamental data like images, videos and text. Ultimately, the survey starts with computer vision, because it is currently a more active research field than language processing.

Note that we do not claim to provide a complete meta-survey of all existing surveys on deep learning, which would go far beyond the scope of this contribution. We see our meta-survey more as a sort of baseline or building block contribution for deep learning in the computer science community, where meta-surveys are, in general, still somewhat a rarity, in comparison to other fields, like the medical domain, where meta-surveys are quite common. In addition, we did not find a deep learning survey for every task at the time of publication. Hence, there are some important tasks missing in our meta-survey, e.g. machine translation in NLP. which should to be tackled by the research community in the near future. However, our survey tables can provide here a compact overview, which tasks have been covered by deep learning surveys so far and which need more attention by the community. Our intention is to present selected works across scientific disciplines, divided into categories according to the underlying data sources, to give an impression about the impact deep learning has on a very broad level.

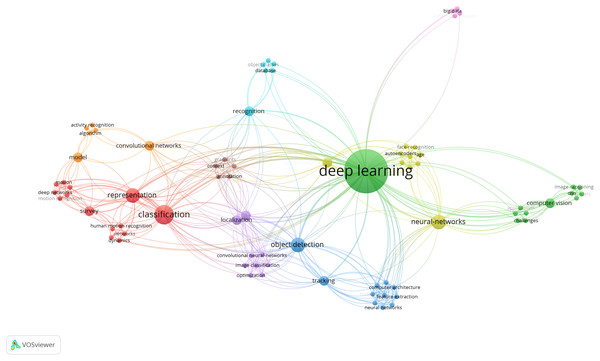

Deep learning reviews in computer vision

This sub-section deals with the deep learning reviews in the area of computer vision. It is divided into ten sub-categories and the number of references, and citations (according to Google Scholar and status as of mid-August 2020) for each of these categories is given in Table 2:

| Computer vision | Publications | Number of references | Citations (until August 2020) | Preprints |

|---|---|---|---|---|

| General computer vision | Voulodimos et al. (2018) | 114 | 550 | No |

| Guo et al. (2016) | 216 | 950 | No | |

| Object detection | Liu et al. (2020) | 332 | 269 | No |

| Zhao et al. (2019) | 230 | 491 | No | |

| Jiao et al. (2019) | 317 | 45 | No | |

| Image segmentation | Garcia-Garcia et al. (2018) | 126 | 127 | No |

| Minaee et al. (2020) | 172 | 24 | Yes | |

| Face recognition | Masi et al. (2018) | 81 | 220 | No |

| Li & Deng (2020) | 253 | 189 | No | |

| Wang & Deng (2018) | 305 | 11 | Yes | |

| Action/motion recognition | Herath, Harandi & Porikli (2017) | 161 | 339 | No |

| Wang et al. (2018b) | 182 | 122 | No | |

| Biometric recognition | Sundararajan & Woodard (2018) | 176 | 66 | No |

| Minaee et al. (2019) | 282 | 8 | Yes | |

| Image super-resolution | Wang, Chen & Hoi (2020) | 214 | 74 | No |

| Image captioning | Hossain et al. (2019) | 161 | 118 | No |

| Data augmentation | Shorten & Khoshgoftaar (2019) | 140 | 274 | No |

| Generative adversarial networks | Wang, She & Ward (2019) | 162 | 46 | Yes |

| Sum | – | 3,624 | 3,923 | – |

general computer vision,

object detection,

image segmentation,

face recognition,

action/motion recognition,

biometric recognition,

image super-resolution,

image captioning,

data augmentation,

and generative adversarial networks.

The commonality in the field of computer vision is to process and get some kind of understanding from digital images or videos. This can be, for example, the detection or segmentation (outlining) of people, animals or objects in images or videos. Computer vision algorithms lead to numerous real world applications, like automatic face or licence plate detection and recognition, or even self-driving cars, just to name a few. However, making the algorithms reliable enough for their specific task remains challenging. A missed person on a group photo for automatic tagging on a social network website may not be very dramatic, but a missed pedestrian by a self-driving car can have fatal consequences. Especially the fact that every image or video is slightly different makes it very hard for algorithms to generalize and there is no guarantee that new (exceptional) cases will not be missed or wrongly analysed/classified by an algorithm. To measure the performance of computer vision algorithms, common metrics like the Dice Similarity Coefficient (Sampat et al., 2006) or the Hausdorff distance (Huttenlocher, Klanderman & Rucklidge, 1993), e.g. for segmentation tasks, are used in the community to present their results. However, if research groups work on own data collections, comparison to other works are difficult and the presented metrics can be seen more like a proof of concept or common trend towards a solution for a task. A step towards a more objective evaluation is competing on common databases, like ImageNet, but also this course of action cannot replace real-life scenarios. The key challenge for all computer vision algorithms is the transition to real world applications, that work reliable in practice also for new, unseen data without major failures.

Note that data augmentation and generative adversarial networks are actually universal techniques that can also be used in other areas than computer vision, like language processing. However, the two surveys we chose for our contribution review only works for images and computer vision, hence, we present them in this first section.

General computer vision

A review about selected deep learning methods that have been used in the general area of computer vision is presented by Voulodimos et al. (2018). They introduce convolutional neural networks, deep Boltzmann machines, deep belief networks and stacked denoising autoencoders, alongside with their history and application tasks they have been used for. They conclude their review with an outlook on how future deep learning-based methods can be designed for tasks in computer vision and the challenges that arise in doing so.

Another general review on deep learning in computer vision comes from Guo et al. (2016). They also start their review with a comprehensive overview of different deep learning architectures, like CNNs, covering neural network layer types, like convolutional layers and pooling layers, but also training strategies, like dropout, DropConnect and pre-training/fine-tuning. For the deep learning-based architectures, they discuss characteristics, advantages and disadvantages. They conclude their review with current trends and challenges, and provide future directions for a theoretical understanding, human-level vision, training with limited data, time complexity and more powerful models in deep learning.

Object detection

Object detection is one of the most basic, but as well challenging problems in computer vision. Object detection deals with the localization of objects from predefined categories, like cats, dogs, etc., in natural images. Liu et al. (2020) outline more than 300 research contributions in their survey about object detection. In doing so, they cover many general aspects in the field of object detection, which includes, for example, detection frameworks, but also object feature representation and object proposal generation. On top, they address context modelling, training strategies, and, eventually, evaluation metrics for object detection.

A second review paper in the area of computer vision and object detection is from Zhao et al. (2019), which first provides a short introduction on convolutional neural networks, deep learning, and their history. They focus on typical generic object detection architectures and briefly survey various particular tasks. These cover salient object detection, but also pedestrian and face detection. In addition, an experimental analysis is provided, which allows the comparison of different approaches and hence, draw constructive conclusions amongst them.

Finally, Jiao et al. (2019) review existing approaches of general detection models and additionally introduce a common benchmark dataset for them. The authors also outline a comprehensive and systematic overview of numerous approaches for object detection, which include one-stage and multi-stage object detectors. Furthermore, they list and analyse established, as well as new, applications of object detection and its most representative branches.

Image segmentation

Image segmentation is usually the first step in different computer vision applications, like scene understanding, video surveillance, and robotic perception. Further applications can be medical image analysis, augmented reality and image compression, to name a few. Garcia-Garcia et al. (2018) provide a survey of deep learning approaches for semantic segmentation that can be translated and applied to numerous areas. In doing so, datasets, but also challenges, are outlined to guide researchers in the decision which method is most suitable for their needs and aims. Subsequently, the existing approaches and methods, like CNNs, are surveyed in the contribution. Additionally, they review common loss functions and error metrics and provide quantitative results for the introduced methods, but also the datasets that have been used for an evaluation.

Minaee et al. (2020) present a comprehensive review that covers a wide spectrum of contributions in the area of semantic and instance-level segmentation. This includes fully convolutional pixel-labelling networks and encoder-decoder architectures. Moreover, recurrent networks, multi-scale and pyramid-based methods. Further, visual attention and generative models in an adversarial setting. They studied the strengths and challenges of the proposed deep learning models, but also their similarity. Finally, the authors investigated the most commonly applied datasets and present performance results for them.

Face recognition

Face recognition is a significant biometric method for identity authentication that has been applied to numerous application areas. This includes public security, daily life, military, but also finance. Masi et al. (2018) introduce the main benefits of face recognition with deep learning, also called deep face recognition. They focus on identification and verification by learning representations of the face. The review gives a structured overview of works from the past years, covering the principals and state-of-the-art in face recognition methods.

Li & Deng (2020) provide a survey on facial expression recognition with deep learning, including datasets and algorithms. They present datasets that are available and have been commonly applied in previous works. Further, they outline commonly recognised data selection and evaluation concepts that have been used for these datasets. Next, they outline the general pipeline and workflow for a deep facial expression recognition approach, covering the corresponding background knowledge, and finally propose, for each stage, a feasible implementation.

Wang & Deng (2018) also provide a survey of the latest trends on deep facial expression detection, including the design of algorithms, but also possible applications, protocols and databases. They outline various network architectures and loss functions that have been introduced in the general field of the deep facial expression. They categorized the face processing approaches into two different classes: “one-to-many augmentation” and “many-to-one normalization”. They also give a summarized overview of common databases and compare them concerning model training and model evaluation. Finally, they explored further scenarios for deep facial expression, like cross-factor, heterogeneous, industrial and multiple-media.

Action and motion recognition

Understanding human actions and motions in visual data, like surveillance videos, is closely connected to research fields like object recognition, semantic segmentation, human dynamics and domain adaptation. Herath, Harandi & Porikli (2017) review notable steps that have been taken towards recognizing human actions. Therefore, they start with a discussion of first approaches that applied handcrafted representations. Subsequently, they review deep learning-based approaches suggested in this field.

Wang et al. (2018b) give an outline of latest trends and improvements of motion recognition in RGB-D images. They categorized the surveyed approaches into four groups. The groups are based on the particular modality used for recognition, and can be RGB-, skeleton, depth-, or RGB+D-based. Finally, they discuss the advantages and limitations, with a focus on approaches that encode spatial-temporal-structural information, which is inherent in video sequences.

Biometric recognition

Biometric recognition, or biometrics, studies the identification of people utilizing their unique phenotypical characteristics, like fingerprints or the iris, for applications ranging from cell phone authentication to airport security systems. Sundararajan & Woodard (2018) review one hundred distinct methods that study recognizing individuals with deep learning applying different biometric modalities. They conclude that the majority of research in biometrics based on deep learning has been conducted around face recognition and speaker recognition so far.

Minaee et al. (2019) conduct a survey of more than 120 works on biometric recognition, including face, fingerprint, iris, palm print, ear, voice, signature, and gait recognition. For each biometric recognition task, they present the available datasets used in the literature and their characteristics and outline the performance on popular public benchmarks.

Image super-resolution

Image super-resolution is a basic task in image processing that has seen a rise in popularity with the advent of deep learning. Image super-resolution methods and algorithms are used to improve the resolution of (low-resolution) images and videos. Wang, Chen & Hoi (2020) give a comprehensive overview on latest trends and advances in the field of image super-resolution focusing on deep learning methods. They divide the existing papers on image super-resolution methods into three main categories, namely supervised image super-resolution, unsupervised image super-resolution and finally, domain-specific image super-resolution. Moreover, they cover other topics in the field of image super-resolution, like public accessible benchmark data collections and metrics for a performance evaluation.

Image captioning

Image captioning refers to the generation of a description for an image. Therefore, their primary objects, but also their attributes and their relationships to each other within the image need to be recognized. Furthermore, image captioning must produce sentences that are syntactically and semantically correct. Hossain et al. (2019) introduce a broad survey of works for image captioning based on deep learning. They analyse their main strengths, performances, but also their limitations. In addition, they explore the datasets and the evaluation metrics that have been used for automatic image captioning with deep learning.

Data augmentation

Data augmentation can be used for the expansion of (limited) datasets to obtain larger training and evaluation sets. Shorten & Khoshgoftaar (2019) review image augmentation algorithms that cover geometric transformations, but also colour space augmentations and feature space augmentation. Further, techniques like kernel filters, mixing images, random erasing, neural style transfer and meta-learning. They also cover generative adversarial network-based augmentation methods. In addition, they explore and study further characteristics in the area of data augmentation, like test-time augmentation, final size of the dataset, the impact of the resolution, but also curriculum learning. Finally, they give an overview of available approaches for meta-level decisions for implementing data augmentation.

Generative adversarial networks

Generative adversarial networks or GANs belong to the field of generative models in machine learning. GANs have experienced an in-depth exploration during the last few years with the most significant impact in the field of computer vision. Wang, She & Ward (2019) survey three real-world problems that have been approached with GANs: the generation of high-quality images, diversity of image generation, and stable training. They give a detailed overview of the current state-of-the-art in generative adversarial networks. Furthermore, they structure their review using a specific taxonomy, which they have adopted based on variations in generative adversarial network-based architectures and loss functions.

Going deeper: common architectures, methods, evaluations, pros, cons, challenges and future directions in computer vision

Table 3 gives more details about the presented methods, pros, cons, evaluations and challenges and future directions of the surveys in the category computer vision. The two surveys about general computer vision from Voulodimos et al. (2018) and Guo et al. (2016) introduce mainly CNNs, Boltzmann and autoencoder architectures. The authors list several pros and cons, regarding which networks can learn features automatically, generalize well, are computationally demanding during training, can be trained in real-time and do not perform well on small training sets. A challenge is, that the underlying theory of the models is not well understood, which leads to the problem of selecting an optimal or effective architecture or algorithm for a given task and that there is no clear understanding of what kind of architectures should perform better than other ones. Further challenges and future directions are the training with limited data, reducing the time complexity, the development of more powerful models and a better understanding in evolving and optimizing CNN architectures.

| General computer vision | |

|---|---|

| Voulodimos et al. (2018) | |

| Architectures/Methods | CNN, Boltzmann (DBN and DBM), SdA |

| Pros/Evaluations | Automatic feature learning (CNN), invariant to transformations (CNN); can work in an unsupervised fashion (DBN, DBM, SdA); can be trained in real time (SdA) |

| Cons/Evaluations | Needs labelled data (CNN); computationally demanding training (CNN, DBN, DBM) |

| Challenges and future directions | Optimal selection of model type and structure for a given task; why specific architecture or algorithm is effective in a given task or not |

| Guo et al. (2016) | |

| Architectures/Methods | CNN, RBM, AutoEncoder, Sparse coding |

| Pros/Evaluations | Generalization (CNN, RBM, AutoEncoder, Sparse coding); Unsupervised learning (RBM, AutoEncoder, Sparse coding); Feature learning (CNN, RBM, AutoEncoder); Real-time training (CNN, RBM); Real-time prediction (CNN, RBM, AutoEncoder, Sparse coding); Biological understanding (Sparse coding); Theoretical justification (CNN, RBM, AutoEncoder, Sparse coding); Invariance (CNN, Sparse coding) |

| Cons/Evaluations | Unsupervised learning (CNN); Feature learning (Sparse coding); Real-time training (AutoEncoder, Sparse coding); Biological understanding (CNN, RBM, AutoEncoder); Invariance (RBM, AutoEncoder); Small training set (CNN, RBM, AutoEncoder, Sparse coding) |

| Challenges and future directions | Underlying theory is not well understood; no clear understanding of which architectures should perform better than others; training with limited data; time complexity; more powerful models; better understanding in evolving and optimizing the CNN architectures |

| Object detection | |

| Liu et al. (2020) | |

| Architectures/Methods | Region Based (Two Stage) Frameworks (RCNN, SPPNet, Fast RCNN, Faster RCNN, RPN, RFCN, Mask RCNN, Chained Cascade Network and Cascade RCNN, Light Head RCNN), Unified (One Stage) Frameworks |

| Pros/Evaluations | Improved detection speed and quality (Fast RCNN); end-to-end detector training (Fast RCNN); efficient and accurate generating region proposals (Faster RCN); efficient region proposal computation (RPN); fully convolutional over the entire image (RFCN); pixelwise object instance segmentation (Mask RCNN); simple to training (Mask RCNN); end-to-end learning of more than two cascaded classifiers (Chained Cascade Network and Cascade RCNN); reduce the RoI computation (Light Head RCNN); single-stage object detectors based on fully convolutional deep networks (OverFeat); uses features from an entire image globally (YOLO); real time detection (YOLOv2); faster than YOLO (SSD); retaining high detection quality (SSD); outperforming all previous one stage detectors (CornerNet) |

| Cons/Evaluations | Slow and hard to optimize (RCNN); expensive in disk space and time (RCNN); Testing is slow (RCNN); slow (DetectorNet); less accurate than RCNN (OverFeat); makes localization errors (YOLO); fail to localize small objects (YOLO); slower than SSD (CornerNet) |

| Challenges and future directions | Open world Learning; better and more efficient detection frameworks; compact and efficient CNN features; automatic neural architecture search; object instance segmentation; weakly supervised detection; few/zero shot object detection; object detection in other modalities; universal object detection; the research field of generic object detection is still far from complete |

| Zhao et al. (2019) | |

| Architectures/Methods | Region Proposal-Based Framework (R-CNN, SPP-Net, Fast R-CNN, Faster R-CNN, R-FCN, FPN, Mask R-CNN, Multitask Learning, Multiscale Representation, and Contextual Modeling), Regression/Classification-Based Framework (Pioneer Works, YOLO, SSD) |

| Pros/Evaluations | Hierarchical feature representation, exponentially increased expressive capability, jointly optimize several related tasks together, large learning capacity (CNN); mid-level representations (SPP-Net); improve the quality of candidate BBs, extract high-level features (R-CNN); grouping and saliency cues to provide more accurate candidate boxes of arbitrary sizes quickly and to reduce the searching space in object detection (Region Proposal Generation); high-level, semantic, and robust feature representation for each region proposal can be obtained (CNN-Based Deep Feature Extraction); single stage training (Fast R-CNN); saves storage space (Fast R-CNN); end-to-end training (Faster R-CNN); object detection in a fully convolutional architecture (R-FCN); extract rich semantics from all levels and be trained end to end with all scales (FPN); predict segmentation masks in a pixel-to-pixel manner (Mask R-CNN); representation requires fewer parameters (Mask R-CNN); flexible and efficient framework for instance-level recognition (Mask R-CNN) |

| Cons/Evaluations | Fixed size input image (CNN); Training is a multistage pipeline (R-CNN); training is expensive in space and time (CNN); redundant obtained region proposals (CNN); fixed-size input (SPP-Net); additional expense on storage space (SPP-Net); alternate training algorithm is very time-consuming (Faster R-CNN); training time and memory consumption increase rapidly (FPN); struggles in small-size object detection and localization (Mask R-CNN) |

| Challenges and future directions | Multitask joint optimization and multimodal information fusion; scale adaption; spatial correlations and contextual modeling; cascade network; unsupervised and weakly supervised learning; network optimization; 3D object detection; video object detection |

| Jiao et al. (2019) | |

| Architectures/Methods | Two-Stage Detectors (R-CNN, Fast R-CNN, Faster R-CNN, Mask R-CNN), One-Stage Detectors (YOLO, YOLOv2, YOLOv3, SSD, SSD, RetinaNet, M2Det), Latest Detectors (Relation Networks for Object Detection, DCNv2, NAS-FPN) |

| Pros/Evaluations | One-stage end-to-end training (Fast R-CNN); efficiently predict region proposals (Faster R-CNN); accurate object detector (Mask R-CNN); real-time detection (YOLO); improved speed and precision (YOLOv2); multi-label classification (YOLOv3); detects small objects (YOLOv3); single-shot detector for multiple categories within one-stage (SSD); adds prediction module and deconvolution module (DSSD); train unbalanced positive and negative examples (RetinaNet); effective feature pyramids (M2Det); an end-to-end training (RefineDet); better predict hard detected objects (RefineDet); considers the interaction between different targets in an image (Relation Networks for Object Detection); utilizes more deformable convolutional layers (DCNv2); deformable layers are modulated by a learnable scalar (DCNv2); top-down and bottom-up connections to fuse features (NAS-FPN) |

| Cons/Evaluations | Input vectors of fixed length (CNN); no shared computation (R-CNN); worse performance on medium and larger sized objects (YOLOv3); slower than RetinaNet800 (M2Det) |

| Challenges and future directions | Combining one-stage and two-stage detectors; video object detection; efficient post-processing methods; weakly supervised object detection methods; multi-domain object detection; 3D object detection; salient object detection; unsupervised object detection; multi-task learning; multi-source information assistance; constructing terminal object detection system; medical imaging and diagnosis; advanced medical biometrics; remote sensing airborne and real-time detection; GAN-based detector |

| Image segmentation | |

| Garcia-Garcia et al. (2018) | |

| Architectures/Methods | Variants of CNN, AlexNet, VGG-16, GoogLeNet, ResNet, ReNet, and custom architectures |

| Pros/Evaluations | Relatively simple (AlexNet); less parameters and easy to train (VGG); reduced numbers of parameters and operations (GoogLeNet); addresses problem of training deep networks (ResNet); overcoming vanishing gradients (ResNet); Accuracy, Efficiency, Training, Instance Sequences, Multi-modal, 3D |

| Cons/Evaluations | Complex (GoogLeNet); depth (ResNet); Accuracy, Efficiency, Training, Instance Sequences, Multi-modal, 3D |

| Challenges and future directions | Evaluation metrics; execution time; memory footprint; accuracy; reproducibility; 3D datasets; sequence datasets; point cloud segmentation; context knowledge; real-time segmentation; temporal coherency on sequences; multi-view integration |

| Minaee et al. (2020) | |

| Architectures/Methods | Fully convolutional networks; Convolutional models with graphical models; Encoder-decoder based models; Multi-scale and pyramid network based models; R-CNN based models (for instance segmentation); Dilated convolutional models and DeepLab family; Recurrent neural network based models; Attention-based models; Generative models and adversarial training; Convolutional models with active contour models; Other models |

| Pros/Evaluations | Quantitative accuracy, speed (inference time), and storage requirements (memory footprint) |

| Cons/Evaluations | Quantitative accuracy, speed (inference time), and storage requirements (memory footprint) |

| Challenges and future directions | More challenging datasets; interpretable deep models; weakly-supervised and unsupervised learning; real-time models for various applications; memory efficient models; 3D point cloud segmentation; application scenarios |

| Face recognition | |

| Masi et al. (2018) | |

| Architectures/Methods | DCNN, Deep-Face, VGG16, ResNet-50, FacePoseNet, STN, DREAM |

| Pros/Evaluations | Simplify classification (DREAM); reduce intra-class variance (CenterLoss); reduce the within-class variability (L2-constrained SoftMax); huge number of subjects (Hierarchical SoftMax); training very large pool of subjects (deep metric learning); learning deep embeddings (margin-based contrastive loss) |

| Cons/Evaluations | Handling pose variations (DCNN); does not explicitly minimize the intra-class variation of each subject (SoftMax layer based on cross-entropy); unclear parameter selection (L2-constrained SoftMax); increased complexity (deep metric learning losses) |

| Challenges and future directions | Automatically generated template for unknown individuals; video-based face recognition; multi-target tracking and recognition; automatic self-organization of a large corpus of unlabeled faces; adaptation and model tuning; biases in large datasets |

| Li & Deng (2020) | |

| Architectures/Methods | CNN, RBM, DBN, GAN, face alignment detectors (holistic, part-based, cascaded regression, deep learning), deep FER networks for static images, deep FER networks for dynamic image sequence, AlexNet, VGG, VGG-face, GoogleNet, frame aggregation, expression intensity, RNN, C3D, FLT, CN, NE, others |

| Pros/Evaluations | Real-time, speed, performance (holistic, part-based, cascaded regression, deep learning); network size, preprocessing, data selection, additional classifier, performance (CNN, RBM, DBN, GAN); data size, spatial and temporal information, frame length, accuracy, efficiency (frame aggregation, expression intensity, RNN, C3D, FLT, CN, NE) |

| Cons/Evaluations | Real-time, speed, performance (holistic, part-based, cascaded regression, deep learning); network size, preprocessing, data selection, additional classifier, performance (CNN, RBM, DBN, GAN); data size, spatial and temporal information, frame length, accuracy, efficiency (frame aggregation, expression intensity, RNN, C3D, FLT, CN, NE) |

| Challenges and future directions | Facial expression dataset (illumination variation, occlusions, non frontal head poses, identity bias and the recognition of low-intensity expression, age, gender and ethnicity, employ crowd-sourcing models, fully automatic labeling tool); dataset bias and imbalanced distribution (generalization on unseen test data, performance in cross-dataset settings, imbalanced class distribution); Incorporating other affective model (capture the full repertoire of expressive behaviors, different facial muscle action parts, dealing with continuous data, learn expression-discriminative representations); multimodal affect recognition (multimodal sentiment analysis, processing these diverse modalities, multi-sensor data fusion methods, intra-modality and inter-modality dynamics, infrared images, depth information from 3D face models, physiological data) |

| Wang & Deng (2018) | |

| Architectures/Methods | Backbone network (AlexNet, VGGNet, GoogleNet, ResNet, SENet, light-weight architectures, adaptive architectures, joint alignment-recognition architectures), assembled networks (multipose, multipatch, multitask), DeepFace, DeepID2, DeepID3, FaceNet, Baidu, VGGface, light-CNN, loss related |

| Pros/Evaluations | Number of networks, training set, accuracy (DeepFace, DeepID2, DeepID3, FaceNet, Baidu, VGGface, light-CNN, loss related) |

| Cons/Evaluations | Number of networks, training set, accuracy (DeepFace, DeepID2, DeepID3, FaceNet, Baidu, VGGface, light-CNN, loss related) |

| Challenges and future directions | Security issues; privacy-preserving face recognition; understanding deep face recognition; remaining challenges defined by non-saturated benchmark datasets; ubiquitous face recognition across applications and scenes; pursuit of extreme accuracy and efficiency; Fusion issues |

| Action/motion recognition | |

| Herath, Harandi & Porikli (2017) | |

| Architectures/Methods | Spatiotemporal networks, multiple stream networks, deep generative networks, temporal coherency networks, VGG, Decaf, RNN, LSTM, LRCN, Dynencoder, autoencoder, adversarial models, Siamese Networks, others |

| Pros/Evaluations | Accuracy on seven challenging action datasets (CNN, ClarifaiNet, GoogLeNet, VGG, others) |

| Cons/Evaluations | Accuracy on seven challenging action datasets (CNN, ClarifaiNet, GoogLeNet, VGG, others) |

| Challenges and future directions | Training video data; knowledge transfer; heterogeneous domain adaptation; boost the performance; generic form of deep architectures for spatiotemporal learning; carefully engineered approaches; data augmentation techniques; foveated architecture; distinct frame sampling strategies; more realistic activities; real-life scenarios; deeper understanding in action recognition |

| Wang et al. (2018b) | |

| Architectures/Methods | CNN, LSTM, RNN, Autoencoder, DDNN, IDMM, others |

| Pros/Evaluations | Accuracy, Jaccard Index, cross-subject setting, cross-view setting (CNN, LSTM, RNN, Autoencoder, DDNN, IDMM, others) |

| Cons/Evaluations | Accuracy, Jaccard Index, cross-subject setting, cross-view setting (CNN, LSTM, RNN, Autoencoder, DDNN, IDMM, others) |

| Challenges and future directions | Better results on large complex dataset; practical intelligent recognition systems; encoding temporal information; Small training data; viewpoint variation and occlusion; execution rate variation and repetition; cross-datasets; online motion recognition; action prediction; hybrid networks; simultaneous exploitation of spatial-temporal-structural information; fusion of multiple modalities; large-scale datasets; zero/one-shot learning; outdoor practical scenarios; unsupervised learning/self-learning; online motion recognition and prediction |

| Biometric recognition | |

| Sundararajan & Woodard (2018) | |

| Architectures/Methods | Deep Boltzmann Machines, Restricted Boltzmann Machines, Deep Belief Networks, Autoencoders, CNN, RNN |

| Pros/Evaluations | (recognition) accuracy, Equal Error Rate, Mean Absolute Error, False Alarm Rate, False Rejection Rate (CNN, DNN, DBN, RNN, others) |

| Cons/Evaluations | (recognition) accuracy, Equal Error Rate, Mean Absolute Error, False Alarm Rate, False Rejection Rate (CNN, DNN, DBN, RNN, others) |

| Challenges and future directions | Real-world applicability; beyond face and voice recognition; scaling up in terms of identification; large-scale datasets; dataset quality; computing resources; training speed-up; large-scale identification; behavioral biometrics; robust to data noise; modeling biometric aging; biometric segmentation; fusion of multiple modalities |

| Minaee et al. (2019) | |

| Architectures/Methods | CNN, AlexNet, VGGNet, GoogleNet, ResNet, SphereFace, FingerNet, SCNN, RSM, variants |

| Pros/Evaluations | Equal Error Rate, accuracy (Rank1 identification, verification accuracy), performance (accuracy, Equal Error Rate, R1-ACC) |

| Cons/Evaluations | Equal Error Rate, accuracy (Rank1 identification, verification accuracy), performance (accuracy, Equal Error Rate, R1-ACC) |

| Challenges and future directions | More challenging datasets; interpretable deep models; few shot learning, and self-supervised learning; biometric fusion; real-time models for various applications; memory efficient models; security and privacy issues |

| Image super-resolution | |

| Wang, Chen & Hoi (2020) | |

| Architectures/Methods | SRCNN, DRCN, FSRCNN, ESPCN, LapSRN, DRRN, SRResNe, SRGAN, EDSR, EnhanceNet, MemNet, SRDenseNet, DBPN, DSRN, RDN, CARN, MSRN, RCAN, ESRGAN, RNAN, Meta-RDN, SAN, SRFBN |

| Pros/Evaluations | Performance, PSNR (FSRCNN, LapSR, SRCNN, CARN-M, FALSR-B, FALSR-C, BTSRN, CARN, FALSR-A, OISR-RK2-s, OISR-LF-s, VDSR, MemNet, MSRN, OISR-RK2, MDSR, DBPN, RDN, SAN, RCAN, DRRN, DRCN, EDSR, OISR-RK3) |

| Cons/Evaluations | Performance, PSNR (FSRCNN, LapSR, SRCNN, CARN-M, FALSR-B, FALSR-C, BTSRN, CARN, FALSR-A, OISR-RK2-s, OISR-LF-s, VDSR, MemNet, MSRN, OISR-RK2, MDSR, DBPN, RDN, SAN, RCAN, DRRN, DRCN, EDSR, OISR-RK3) |

| Challenges and future directions | Combining local and global information; combining low- and high-level information; context-specific attention; more efficient architectures; upsampling methods; learning strategies; more accurate metrics; blind IQA methods; unsupervised super-resolution; towards real-world scenarios; dealing with various degradation; domain-specific applications |

| Image captioning | |

| Hossain et al. (2019) | |

| Architectures/Methods | Image encoder (AlexNet, VGGNet, GoogLeNet, ResNet, Inception-V3), Language model (LBL, LSTM, SC-NLM, RNN, DTR, MELM, Language CNN) |

| Pros/Evaluations | BLEU, PPLX, R@K, mrank, METEOR, CIDEr, PPLX, AP, IoU, PPL, Human Evaluation, E-NGAN, E-GAN, SPICE, SPIDEr |

| Cons/Evaluations | BLEU, PPLX, R@K, mrank, METEOR, CIDEr, PPLX, AP, IoU, PPL, Human Evaluation, E-NGAN, E-GAN, SPICE, SPIDEr |

| Challenges and future directions | Detect prominent objects and attributes and their relationships; generating accurate and multiple captions; open-domain dataset; generate high-quality captions; adding external knowledge; generate attractive image captions; supervised learning needs a large amount of labeled training data; focus on unsupervised learning and reinforcement learning |

| Data augmentation | |

| Shorten & Khoshgoftaar (2019) | |

| Architectures/Methods | Deep Learning-based (adversarial training, neural style transfer, GAN data augmentation), CNN, LeNet-5, AlexNet, GAN, NAS, DCGAN, CycleGAN, Progressively-Growing GAN, WGAN, variants |

| Pros/Evaluations | C10, C10+, C100, C100+, SVHN (ResNetl8, ResNet18, WideResNet, Shake-shake regularization); accuracy (original testing data, FGSM, PGD); Visual Turing Test (DCGAN, WGAN); validation accuracy (None, Traditional, GAN, Neural, Neural + loss, Control); AutoAugmen, ARS (Wide-ResNet, Shake-Shake, AmoebaNet, PyramidNet); Rank, score, class (Deep Image) |

| Cons/Evaluations | C10, C10+, C100, C100+, SVHN (ResNetl8, ResNet18, WideResNet, Shake-shake regularization); accuracy (original testing data, FGSM, PGD); Visual Turing Test (DCGAN, WGAN); validation accuracy (None, Traditional, GAN, Neural, Neural + loss, Control); AutoAugmen, ARS (Wide-ResNet, Shake-Shake, AmoebaNet, PyramidNet); Rank, score, class (Deep Image) |

| Challenges and future directions | Establishing a taxonomy of augmentation techniques; improving the quality of GAN samples; combine meta-learning and data augmentation; explore relationships between data augmentation and classifier architecture; extending data augmentation principles to other data types; impact on video data; translation to text, bioinformatics, tabular records; establish benchmarks for different levels of limited data; super-resolution networks; test-time augmentation; meta-learning GAN architectures; practical integration of data augmentation into deep learning software tools; common data augmentation APIs |

| Generative adversarial networks | |

| Wang, She & Ward (2019) | |

| Architectures/Methods | FCGAN, SGAN, BiGAN, CGAN, InfoGAN, AC-GAN, LAPGAN, DCGAN, BEGAN, PROGAN, SAGAN, BigGAN, rGANs, YLG, AutoGAN, MSG-GAN, Loss-Variant GANs, WGAN, WGAN-GP, LSGAN, f-GAN, UGAN, LS-GAN, MRGAN, Geometric GAN, RGAN, SN-GAN, RealnessGAN, Sphere GAN, SS-GAN, variants |

| Pros/Evaluations | Fast sample generation, handles sharp probability distribution (FCGAN); mode diversity, stabilizes training (MRGAN); unified framework (f-GAN); solves vanishing gradient, image quality, solves mode collapse (WGAN); converges fast, stable model training, complex functions (WGAN-GP); vanishing gradient, stabilized training, mode diversity, easy implementation (LSGAN); vanishing gradient, mode collapse (LS-GAN); mode collapse, stable training, converges to Nash equilibrium (Geometric GAN); mode collapse, high order gradient information, training stability (Unrolled GAN); vanishing gradient, unified framework (IPM GANs), mode collapse (RGAN); computationally light, easy implementation, image quality, mode collapse, stable training, vanishing gradient (SN-GAN); stable training, accurate results, no additional constraints (Sphere GAN); self-supervision, competitive results (SS-GAN); discriminator distribution as a measure of realness, image quality (RealnessGAN); time (DCGAN, BEGAN, PROGAN, RFACE); accuracy (DCGAN, BEGAN, PROGAN); score (DCGAN, BEGAN, PROGAN); performance (FCGAN, BEGAN, PROGAN, LSGAN, DCGAN, WGAN-GP, SN-GAN, Geometric GAN, RGAN, AC-GAN, BigGAN, RealnessGAN, MSG-GAN, SS-GAN, YLG, Sphere GAN) |

| Cons/Evaluations | Vanishing gradient for G, mode collapse, low image resolution (FCGAN); low image resolution, vanishing gradient for G, limited testing (MRGAN); stability (f-GAN); convergence time, vanishing gradient, complex learning hard to converge (WGAN); no batch normalization (WGAN-GP); image quality (LSGAN); difficult implementation, image quality (LS-GAN); vanishing gradient, limited testing (Geometric GAN); image quaility (Unrolled GAN); added relativism, performance comparison (RGAN); limited testing (SN-GAN); limited investigation (Sphere GAN); self-supervised architecture (SS-GAN); model diversity (RealnessGAN); time (DCGAN, BEGAN, PROGAN, RFACE); accuracy (DCGAN, BEGAN, PROGAN); score (DCGAN, BEGAN, PROGAN); performance (FCGAN, BEGAN, PROGAN, LSGAN, DCGAN, WGAN-GP, SN-GAN, Geometric GAN, RGAN, AC-GAN, BigGAN, RealnessGAN, MSG-GAN, SS-GAN, YLG, Sphere GAN) |

| Challenges and future directions | Limited GAN research in other non-computer vision areas; GANs are hard to apply to the natural language application field; generating comments to live streaming (NLP); significant impact on neuroscience by tackling privacy issues; limited exploration of time-series data generation; lack of efficient evaluation metrics in some areas; society, safety concerns, e.g. generation of tampered videos; detector for AI-generated images; GPU memory problems for large batched images; loss functions important for stable training; video application is still limited |

The three surveys about object detection with deep learning (Liu et al., 2020; Zhao et al., 2019; Jiao et al., 2019), all outline the main object detectors in deep learning, like R-CNN, Fast R-CNN, Faster R-CNN, Mask R-CNN, etc. in a temporal sequence. In doing so, showing the improvement from one detector to the other over time, which are for example a more arcuate object detection, improved detection speed and quality, variable image sizes, memory consumption, small-size object detection and localization, and end-to-end training possibilities. Future common challenges are even better and more efficient detection frameworks (e.g. the research field of generic object detection is still far from complete), unsupervised and weakly supervised learning, network optimization and combination, video object detection, 3D object detection and multi-domain object detection.

The two surveys about image segmentation (Garcia-Garcia et al., 2018; Minaee et al., 2020) review numerous deep learning architectures and variants, like CNN, AlexNet, VGG-16, GoogLeNet, ResNet, ReNet and further custom architectures. Garcia-Garcia et al. (2018) list the pros and cons for accuracy, efficiency, training, instance sequences, multi-modal and 3D. Minaee et al. (2020) consider the quantitative accuracy, speed (inference time), storage requirements (memory footprint) and further present a metrics evaluation for pixel accuracy/mean pixel accuracy (MPA), intersection over union (IoU)/Jaccard Index and Dice coefficient. Both surveys see future challenges in more memory efficient models, real-time segmentations and diverse datasets, like 3D datasets, sequence datasets, and in general more challenging datasets. Both also point out point cloud segmentation. Further challenges are for example execution time, accuracy, reproducibility and weakly-supervised and unsupervised learning.

The three surveys in face recognition (Masi et al., 2018; Li & Deng, 2020; Wang & Deng, 2018) have all a strong focus on datasets and loss functions. A reason for that is that there exist many large public facial databases, which make comparable evaluations among the methods feasible. In this regard, Li & Deng (2020) focus on databases and evaluations specifically for Facial Expression Recognition (FER) in their survey. However, the surveys see the common challenges in boarded databases, handling biases in large datasets, like ethnicity, gender, age or other factors, having more non-saturated benchmark datasets and ubiquitous face recognition across applications and scenes. Further open challenges are privacy and security issues, pursuing extreme accuracy and efficiency, understanding deep face recognition and fusion issues. Finally, Masi et al. (2018) state reducing intra-class variance and increasing the margin between classes while training as two main challenges, and that video processing and clustering are currently the next frontiers for face recognition.

Table 3 presents two surveys in Action/motion recognition (Herath, Harandi & Porikli, 2017; Wang et al., 2018b), the latter one specific on RGB-D-based human motion recognition with deep learning. Both surveys present numerous deep learning-based methods, like VGG, Decaf, RNN, LSTM, LRCN, autoencoder, adversarial models and others, and present accuracy evaluations on numerous public available benchmark datasets. Common challenges of the surveys point out the need for practical (carefully engineered) systems that can handle more realistic activities and (outdoor) real-life scenarios. Further challenges are the development of more generic architectures that can handle also multiple modalities, have a better performance and can better train video data. Moreover, the surveys point out a deeper understanding in action recognition, better results on smaller training data, but also better results on large complex datasets, solutions for online motion recognition and prediction, and zero/one-shot learning.